Students

| Student | Title |
|---|---|
| Shaheer Afridi | "Medical Imaging Segmentation through State-of-the-art Deep Learning Technique using 3D volumetric Data" |
| Utsab Chalise | "Generating Brain MRI Images for Spatial and Volumetric Upsampling Using Diffusion Techniques" |
| Akintade Egbetakin | "Computer Vision and Deep Learning for the Detection of COVID-19, Pneumonia, and Tuberculosis using Chest X-ray Images" |
| Efoma Ibude | "Satellite Image Super Resolution Using GAN Techniques" |

Collaborators

Prof Fabio Cuzzolin, Oxford Brookes University, UK

Dr George Mylonas, Imperial College London, UK

Dr Shailza Sharma, University of Leeds, UK

Datasets


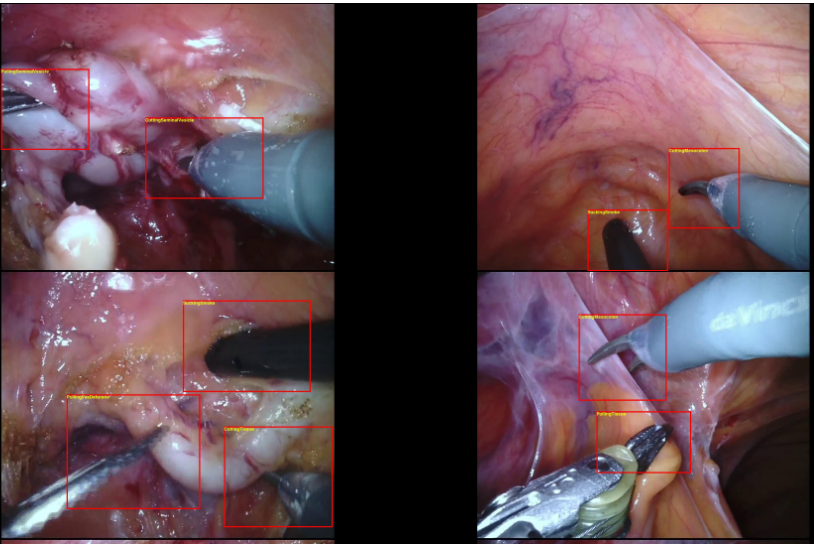

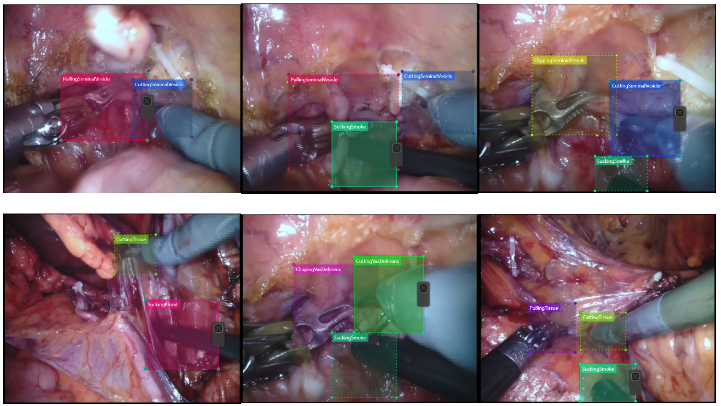

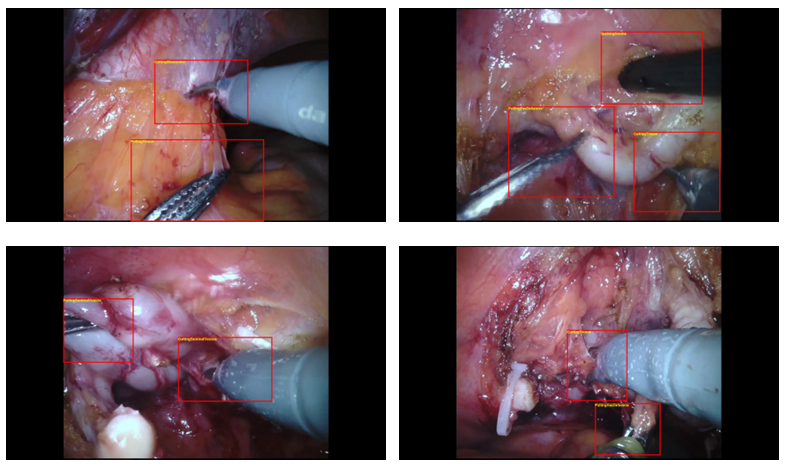

The SARAS-ESAD dataset is a surgical action detection dataset created to support the development of AI systems that assist surgeons during minimally invasive surgery, specifically Robotic-Assisted Radical Prostatectomy (RARP). It is designed for recognizing and localizing the actions performed by surgeons in RARP videos and covers 21 action classes, with one or more action instances in each annotated frame. The dataset is divided into training, validation, and test sets; the training set alone contains over 22,000 frames with annotations for over 28,000 action instances. The dataset was released for the SARAS-ESAD challenge and can be downloaded from the challenge website.
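Localizing an action means predicting a bounding box for it. The description above does not say how localization is scored, so the following is only a minimal sketch of intersection-over-union (IoU), a standard way to compare a predicted box with an annotated one. It assumes boxes in (x1, y1, x2, y2) pixel coordinates; the function names and the 0.5 threshold are illustrative assumptions, not the challenge's official evaluation protocol.

```python
from typing import Tuple

# Assumed box format: (x1, y1, x2, y2) with x2 > x1 and y2 > y1.
Box = Tuple[float, float, float, float]


def box_iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    # Overlap rectangle; width/height clamp to zero when the boxes do not intersect.
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_label: str, pred_box: Box,
                     gt_label: str, gt_box: Box, thr: float = 0.5) -> bool:
    """Hypothetical matching rule: same action class and IoU at or above thr (assumed 0.5)."""
    return pred_label == gt_label and box_iou(pred_box, gt_box) >= thr
```

For example, two 10 x 10 boxes offset by half their width overlap in 50 pixels out of a union of 150, giving an IoU of one third.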


The SARAS-MESAD dataset extends SARAS-ESAD for surgeon action detection in Robotic-Assisted Radical Prostatectomy (RARP). MESAD consists of two subsets: MESAD-Real, which contains videos of real RARP surgeries on patients, and MESAD-Phantom, which contains videos of procedures performed on training phantoms with anatomically similar structures. The main objective of the dataset is to explore whether artificial phantoms can improve surgeon action detection on real patient data. For more details and to download the dataset, visit the challenge website.
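One simple way to explore the phantom-to-real question is to train on batches that mix both subsets. The sketch below is hypothetical: `mixed_batch` and its `phantom_ratio` parameter are illustrative names, samples are treated as opaque items (for example, frame identifiers), and nothing here reflects how MESAD models were actually trained.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def mixed_batch(real: Sequence[T], phantom: Sequence[T], batch_size: int,
                phantom_ratio: float, rng: random.Random) -> List[T]:
    """Draw one training batch mixing MESAD-Real and MESAD-Phantom samples.

    phantom_ratio (hypothetical) is the fraction of the batch taken from phantom data.
    """
    if not 0.0 <= phantom_ratio <= 1.0:
        raise ValueError("phantom_ratio must be in [0, 1]")
    n_phantom = round(batch_size * phantom_ratio)
    n_real = batch_size - n_phantom
    # Sample with replacement so either subset may be smaller than its share of the batch.
    batch = rng.choices(real, k=n_real) + rng.choices(phantom, k=n_phantom)
    # Shuffle so the batch is not ordered real-then-phantom.
    rng.shuffle(batch)
    return batch
```

Sweeping `phantom_ratio` from 0.0 (real data only) upward and measuring detection quality on held-out real videos is one way to test whether phantom data helps.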

Software


This code implements a surgeon action detection model based on a Feature Pyramid Network (FPN). The FPN uses a convolutional neural network (CNN) with pooling layers to build a multi-scale hierarchy of features for action recognition. A ResNet serves as the backbone, extracting features at several stages of the network from each frame of the surgical video. These multi-level features are then processed to predict both the action type (class label) and the bounding box of each detected surgeon action. To reduce overfitting and improve generalization, we use a range of data augmentation techniques and freeze selected layers during training.
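To illustrate how an FPN combines backbone stages, here is a toy sketch of its top-down pathway: start from the coarsest backbone map, upsample it by 2x with nearest-neighbour interpolation, and add it to the lateral projection of the next finer map. Feature maps are single-channel nested lists so the example runs without a deep learning framework; the learned 1x1 lateral convolutions are reduced to scalar weights, and the 3x3 smoothing convolutions and the class/box prediction heads are omitted. This is an assumption-laden illustration, not the model's actual code.

```python
from typing import List, Sequence

# Toy single-channel H x W map standing in for a C x H x W tensor.
FeatureMap = List[List[float]]


def upsample2x(fm: FeatureMap) -> FeatureMap:
    """Nearest-neighbour 2x upsampling, as in FPN's top-down pathway."""
    out: FeatureMap = []
    for row in fm:
        wide = [v for v in row for _ in range(2)]  # repeat each column twice
        out.append(wide)
        out.append(list(wide))  # repeat each row twice
    return out


def add(a: FeatureMap, b: FeatureMap) -> FeatureMap:
    """Element-wise sum of two equally sized maps."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def lateral(fm: FeatureMap, weight: float) -> FeatureMap:
    """Stand-in for a 1x1 lateral convolution: with one channel it is just a scale."""
    return [[weight * v for v in row] for row in fm]


def fpn_top_down(backbone_feats: Sequence[FeatureMap],
                 lateral_weights: Sequence[float]) -> List[FeatureMap]:
    """Merge backbone maps ordered fine-to-coarse, each half the size of the previous.

    Returns pyramid levels ordered fine-to-coarse, matching the input sizes.
    """
    # The coarsest level only receives its lateral projection.
    merged = [lateral(backbone_feats[-1], lateral_weights[-1])]
    # Walk from coarse to fine, adding the upsampled coarser level to each lateral map.
    for fm, w in zip(reversed(backbone_feats[:-1]), reversed(lateral_weights[:-1])):
        top_down = upsample2x(merged[0])
        merged.insert(0, add(lateral(fm, w), top_down))
    return merged
```

With a ResNet backbone, `backbone_feats` would correspond to the outputs of its successive stages; each merged level combines fine spatial detail with the semantics of coarser stages before being passed to the prediction heads.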


Semantic segmentation of surgical scenes is a challenging task for two main reasons: the deformable nature of organs and the close viewpoint of the endoscope camera. Organs can change shape significantly during surgery, and because the camera sits so close to the tissue, its field of view is limited and even minor movements of the endoscope can drastically alter the structure of the scene. To address these challenges, we have developed a segmentation model specifically for the semantic understanding of surgical scenes.
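The paragraph does not state how segmentation quality is measured. A common choice for semantic segmentation is mean intersection-over-union (mIoU) over classes; the sketch below assumes the prediction and ground truth are H x W grids of integer class ids and averages only over classes that appear in at least one of them. It is illustrative, not the evaluation code used for this model.

```python
from typing import Sequence


def mean_iou(pred: Sequence[Sequence[int]], target: Sequence[Sequence[int]],
             num_classes: int) -> float:
    """Mean IoU over classes present in either mask.

    pred and target are H x W grids of class ids in [0, num_classes).
    """
    inter = [0] * num_classes
    union = [0] * num_classes
    for prow, trow in zip(pred, target):
        for p, t in zip(prow, trow):
            if p == t:
                # Correct pixel: counts once towards both intersection and union.
                inter[p] += 1
                union[p] += 1
            else:
                # Mismatched pixel: adds to the union of the predicted and the true class.
                union[p] += 1
                union[t] += 1
    ious = [i / u for i, u in zip(inter, union) if u > 0]
    return sum(ious) / len(ious) if ious else 0.0
```

Averaging per class, rather than over all pixels, keeps small structures such as instruments from being swamped by large background regions.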

Selected Publications


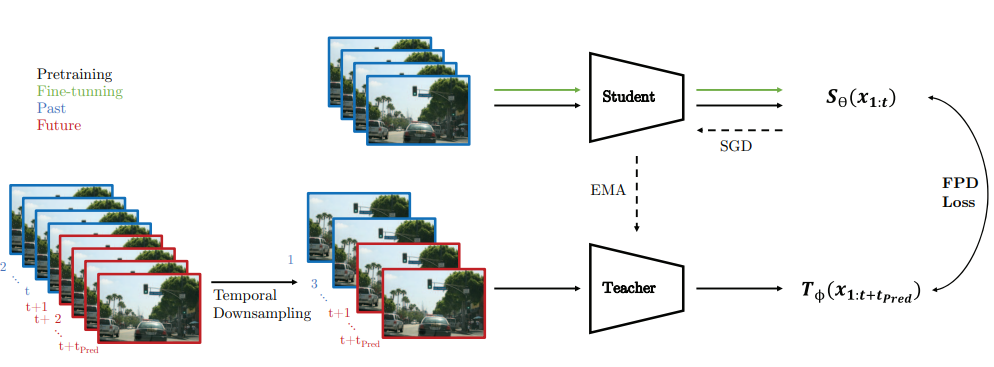

Temporal DINO: A Self-supervised Video Strategy to Enhance Action Prediction. Izzeddin Teeti, Rongali Sai Bhargav, Vivek Singh, Andrew Bradley, Biplab Banerjee, Fabio Cuzzolin. IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), 2023. [link]


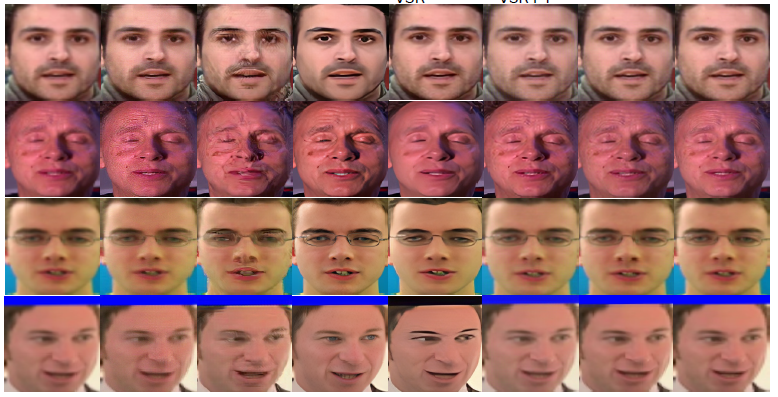

Dual Stage Semantic Information Based Generative Adversarial Network for Image Super-Resolution. Shailza Sharma, Abhinav Dhall, Vinay Kumar, Vivek Singh. Fourteenth Indian Conference on Computer Vision, Graphics and Image Processing (ICVGIP), 2023. [link]


The SARAS endoscopic surgeon action detection (ESAD) dataset: Challenges and methods. Vivek Singh Bawa, Gurkirt Singh, Francis KapingA, Inna Skarga-Bandurova, Elettra Oleari, Alice Leporini, Carmela Landolfo, Pengfei Zhao, Xi Xiang, Gongning Luo, Kuanquan Wang, Liangzhi Li, Bowen Wang, Shang Zhao, Li Li, Armando Stabile, Francesco Setti, Riccardo Muradore, Fabio Cuzzolin. arXiv, 2021. [pdf]


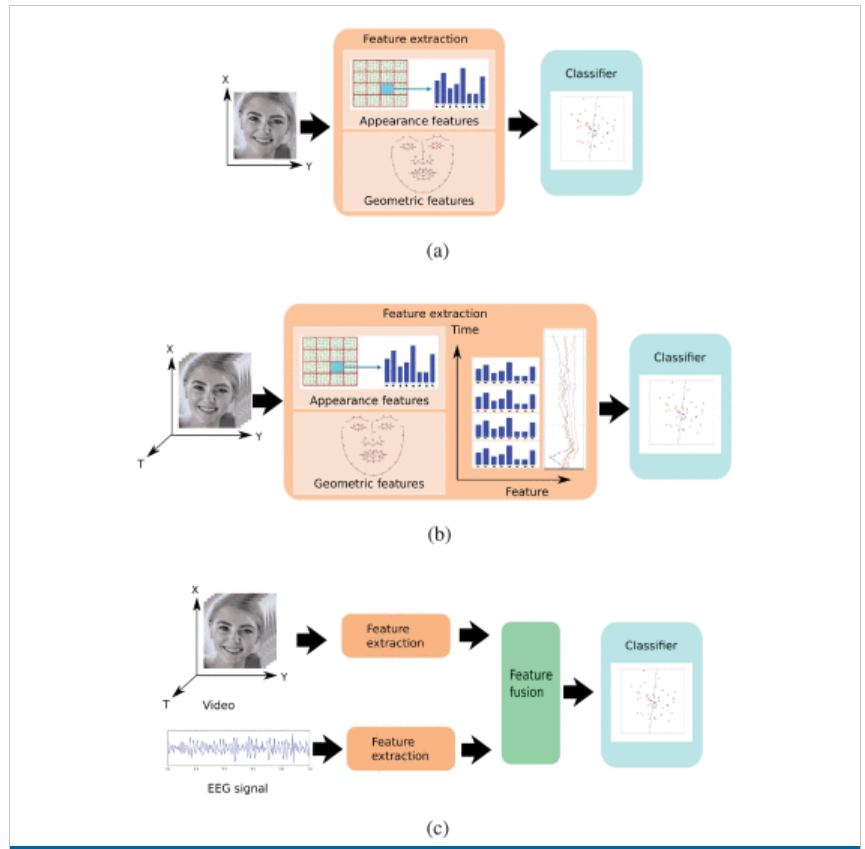

An automatic multimedia likability prediction system based on facial expression of observer. Vivek Singh Bawa, Shailza Sharma, Mohammed Usman, Abhimat Gupta, Vinay Kumar. IEEE Access, 2021. [link]


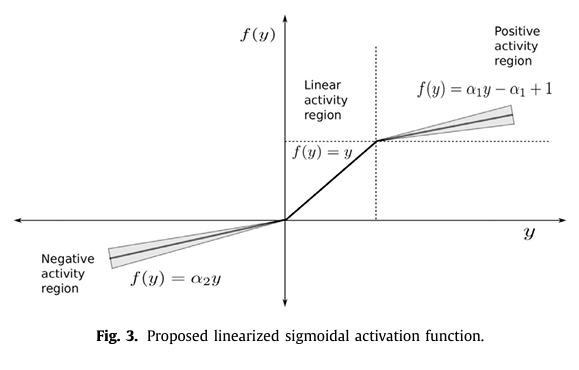

Linearized sigmoidal activation: A novel activation function with tractable non-linear characteristics to boost representation capability. Vivek Singh Bawa and Vinay Kumar. Expert Systems with Applications, 2019. [link]